I'm already running SonarQube on K8s, but I took a shortcut there by using hostPath persistent storage. That means the pod must always run on one specific node, which is suboptimal. Since I still have my Synology, I decided to try using it as an NFS server for the SonarQube storage. The first step is to enable NFS on the Synology:

Then create a shared folder for the data:

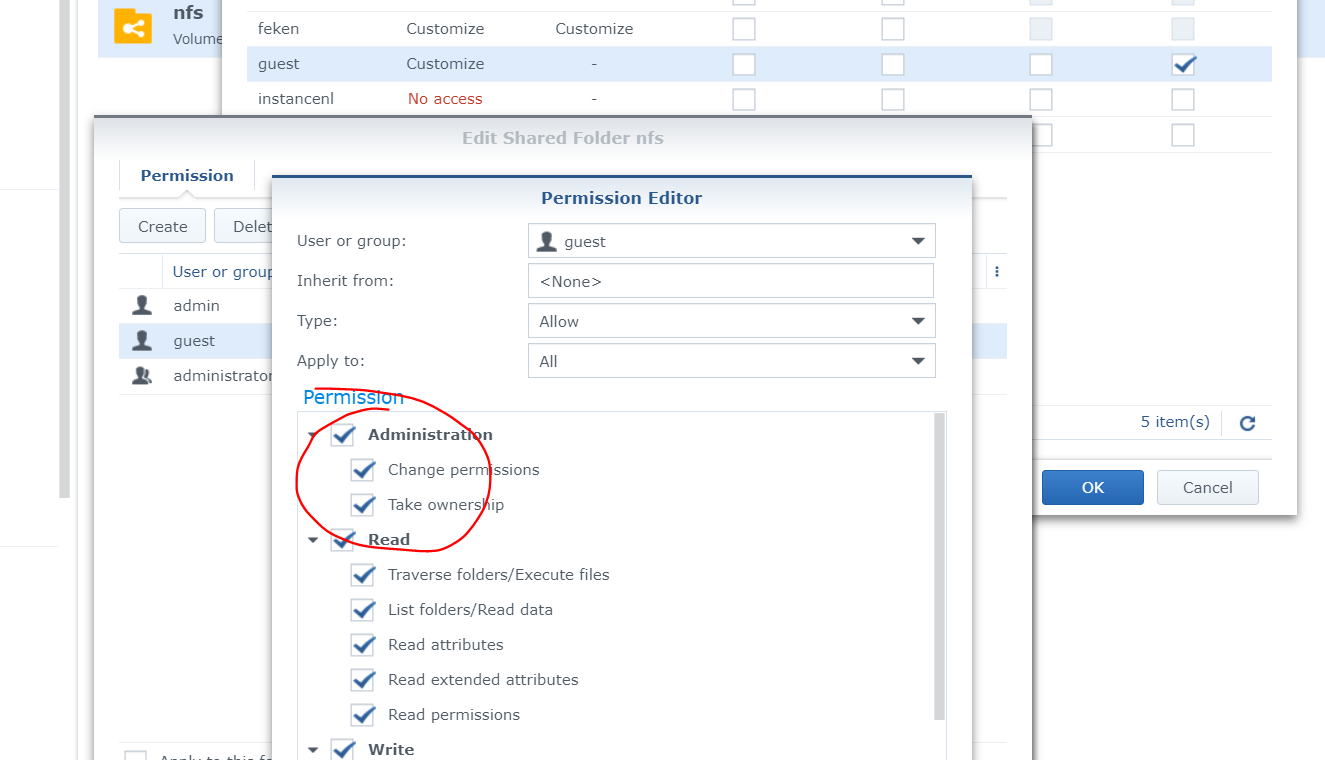

One important thing to notice here is that you should not enable squash. SonarQube tries to set permissions with chown on startup, and this fails when squash is enabled. The guest user also needs full permissions on the folder (this is not the default):

Now, there are numerous ways to get NFS working on K8s, and they all differ. I guess this is because it is a moving target, and the way to get it working depends on the Kubernetes version. I'm running MicroK8s 1.19, which is fairly new, so I tried the simplest solution I could find:

First install nfs-common on all nodes:

```shell
sudo apt install nfs-common
```
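Before creating anything in Kubernetes, an optional manual check from one of the nodes can save some debugging: it confirms the export is visible and that chown works over NFS, which is what SonarQube needs at startup. This is a minimal sketch that assumes the server address and export path used in the PV (192.168.1.2 and /volume1/nfs/sonar); the mount point /mnt/nfs-test and the UID/GID 1000 are arbitrary values picked only for the test.

```shell
# List the exports offered by the Synology (showmount ships with nfs-common)
showmount -e 192.168.1.2

# Temporarily mount the shared folder on an arbitrary test mount point
sudo mkdir -p /mnt/nfs-test
sudo mount -t nfs 192.168.1.2:/volume1/nfs/sonar /mnt/nfs-test

# Try a chown like the one SonarQube does on startup.
# With squash enabled this is expected to fail; UID/GID 1000 is just an example.
sudo touch /mnt/nfs-test/chown-test
sudo chown 1000:1000 /mnt/nfs-test/chown-test

# Clean up the test file and unmount
sudo rm /mnt/nfs-test/chown-test
sudo umount /mnt/nfs-test
```

If the chown step fails, recheck the squash and guest permission settings on the shared folder before going further.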

Creating a PV:

```yaml
apiVersion: v1
kind: PersistentVolume
metadata:
  name: nfs-sonar
spec:
  storageClassName: "" # no StorageClass: claims bind to this static PV
  capacity:
    storage: 10Gi
  accessModes:
    - ReadWriteMany
  nfs:
    server: 192.168.1.2 # IP address of the NFS server (the Synology)
    path: "/volume1/nfs/sonar" # path to the shared folder on the NFS server
```

A PV claim:

```yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: nfs-sonar
spec:
  storageClassName: "" # must match the PV's (empty) storageClassName
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 8Gi # must not exceed the PV's 10Gi capacity
```

And changing my SonarQube deployment to this:

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: sonarqube
spec:
  replicas: 1
  selector:
    matchLabels:
      name: sonarqube
  template:
    metadata:
      name: sonarqube
      labels:
        name: sonarqube
    spec:
      containers:
        - image: sonarqube:latest
          name: sonarqube
          ports:
            - containerPort: 9000
              name: sonarqube
          volumeMounts:
            - name: nfs-sonar
              mountPath: /opt/sonarqube/data
      volumes:
        - name: nfs-sonar
          persistentVolumeClaim:
            claimName: nfs-sonar
```

And it works! This Deployment isn't tied to a specific node anymore.
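To apply the manifests and confirm that the claim is actually bound to the NFS volume, something along these lines should work. This is a sketch: the file names are hypothetical, it assumes everything lives in the default namespace, and on MicroK8s kubectl is invoked as `microk8s kubectl` unless you have set up an alias.

```shell
# Create the PV, the PVC and the Deployment (file names are hypothetical)
microk8s kubectl apply -f nfs-sonar-pv.yaml
microk8s kubectl apply -f nfs-sonar-pvc.yaml
microk8s kubectl apply -f sonarqube-deployment.yaml

# Both the PV and the PVC should report STATUS "Bound"
microk8s kubectl get pv nfs-sonar
microk8s kubectl get pvc nfs-sonar

# The NODE column shows where the pod was scheduled; a replacement pod
# can land on any node and still mount the same NFS-backed data
microk8s kubectl get pods -l name=sonarqube -o wide
```

If the PVC stays `Pending`, the usual suspects are a mismatch in `storageClassName` or `accessModes` between the PV and the claim, or a storage request larger than the PV's capacity.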